A Choice-of-Law Alternative to Federal Preemption of State Privacy Law

Executive Summary

A prominent theme in debates about US national privacy legislation is whether federal law should preempt state law. A federal statute could create one standard for markets that are obviously national in scope. Another approach is to allow states to be “laboratories of democracy” that adopt different laws so they can discover the best ones.

We propose a federal statute requiring states to recognize contractual choice-of-law provisions, so companies and consumers can choose what state privacy law to adopt. Privacy would continue to be regulated at the state level. However, the federal government would provide for jurisdictional competition among states, such that companies operating nationally could comply with the privacy laws of any one state.

Our proposed approach would foster a double competition aimed at discerning and delivering on consumers’ true privacy interests: market competition to deliver privacy policies that consumers prefer and competition among states to develop the best privacy laws.

Unlike a single federal privacy law, this approach would provide 50 competing privacy regimes for national firms. The choice-of-law approach can trigger competition and innovation in privacy practices while preserving a role for meaningful state privacy regulation.

Introduction

The question of preemption of state law by the federal government has bedeviled debates about privacy regulation in the United States. One prominent approach is a national privacy policy that largely preempts state policies, creating one standard for markets that are obviously national. Another approach is to allow states to be “laboratories of democracy” that adopt different laws, with the hope that they will adopt the best rules over time. Both approaches have substantial costs and weaknesses.

The alternative approach we propose would foster a double competition aimed at discerning and delivering on consumers’ true privacy interests: market competition to deliver privacy policies that consumers prefer and competition among states to develop the best privacy laws. Indeed, our proposal aims to obtain the best features—and avoid the worst features—of both a federal regime and a multistate privacy law regime by allowing firms and consumers to agree on compliance with the single regime of their choosing.

Thus, we propose a federal statute requiring states to recognize contractual choice-of-law provisions, so companies and consumers can choose what state privacy law to adopt. Privacy would continue to be regulated at the state level. However, the federal government would provide for jurisdictional competition among states, and companies operating nationally could comply with the privacy laws of any one state.

Unlike a single federal privacy law, this approach would provide 50 competing privacy regimes for national firms. Protecting choice of law can trigger competition and innovation in privacy practices while preserving a role for meaningful state privacy regulation.

The Emerging Patchwork of State Privacy Statutes Is a Problem for National Businesses

A strong impetus for federal privacy legislation is the opportunity national and multinational businesses see to alleviate the expense and liability of having a patchwork of privacy statutes with which they must comply in the United States. Absent preemptive legislation, they could conceivably operate under 50 different state regimes, which would increase costs and balkanize their services and policies without coordinate gains for consumers. Along with whether a federal statute should have a private cause of action, preempting state law is a top issue when policymakers roll up their sleeves and discuss federal privacy legislation.

But while the patchwork argument is real, it may be overstated. There are unlikely ever to be 50 distinct state regimes; rather, a small number of state legislation types is likely, as jurisdictions follow each other’s leads and group together, including by promulgating model state statutes.[1] States also tend not to follow the worst examples from their brethren, as the lack of biometric statutes modeled on Illinois’s legislation illustrates.[2]

Along with fewer “patches,” the patchwork’s costs will tend to diminish over time as states land on relatively stable policies, allowing compliance to be somewhat routinized.

Nonetheless, the patchwork is far from ideal. It is costly to firms doing business nationally. It costs small firms more per unit of revenue, raising the bar to new entry and competition. And it may confuse consumers about what their protections are (though consumers don’t generally assess privacy policies carefully anyway).

But a Federal Privacy Statute Is Far from Ideal as Well

Federal preemption has many weaknesses and costs as well. Foremost, it may not deliver meaningful privacy to consumers. This is partially because “privacy” is a congeries of interests and values that defy capture.[3] Different people prioritize different privacy issues differently. In particular, the elites driving and influencing legislation may prioritize certain privacy values differently from consumers, so legislation may not serve most consumers’ actual interests.[4]

Those in the privacy-regulation community sometimes assume that passing privacy legislation ipso facto protects privacy, but that is not a foregone conclusion. The privacy regulations issued under the Gramm-Leach-Bliley Act (concerning financial services)[5] and the Health Insurance Portability and Accountability Act (concerning health care)[6] did not usher in eras of consumer confidence about privacy in their respective fields.

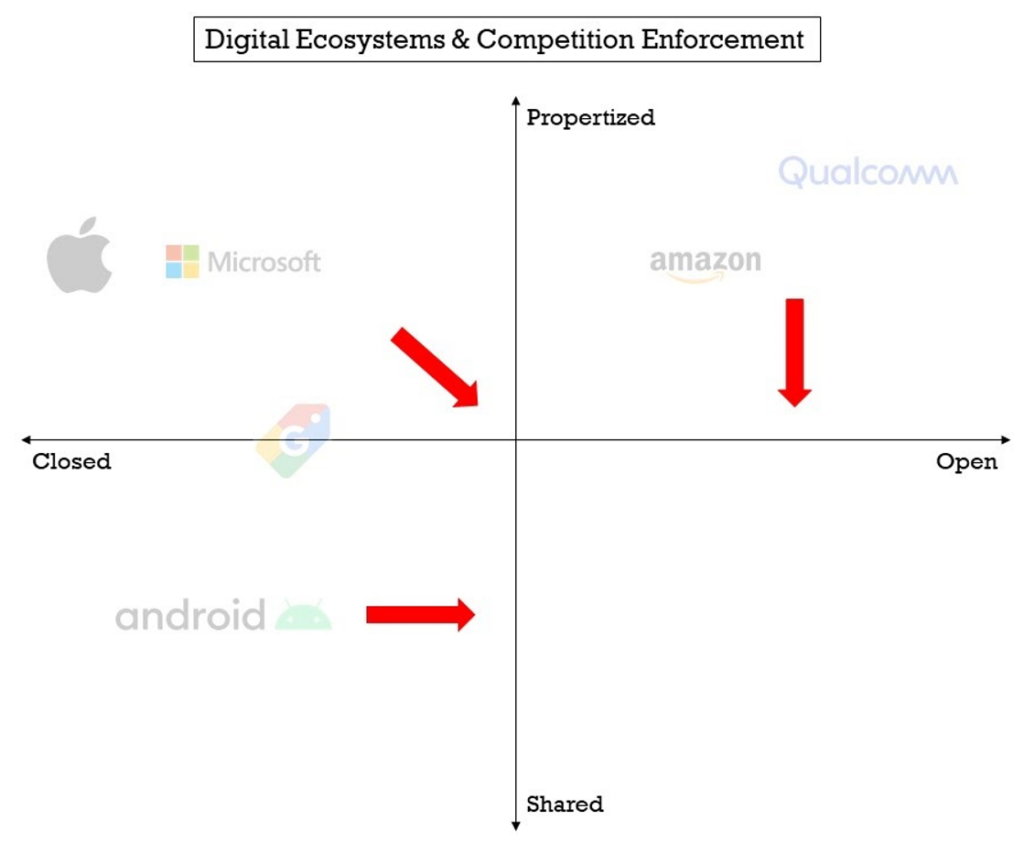

The short-term benefits of preempting state law may come with greater long-term costs. One cost is the likely drop in competition among firms around privacy. Today, as some have noted, “Privacy is actually a commercial advantage. . . . It can be a competitive advantage for you and build trust for your users.”[7] But federal privacy regulation seems almost certain to induce firms to treat compliance as the full measure of privacy to offer consumers. Efforts to outperform or ace out one another will likely diminish.[8]

Another long-term cost of preempting state law is the drop in competition among states to provide well-tuned privacy and consumer-protection legislation. Our federal system’s practical genius, which Justice Louis Brandeis articulated 90 years ago in New State Ice Co. v. Liebmann, is that state variation allows natural experiments in what best serves society: business and consumer interests alike.[9] Because variations are allowed, states can amend their laws individually, learn from one another, adapt, and converge on good policy.

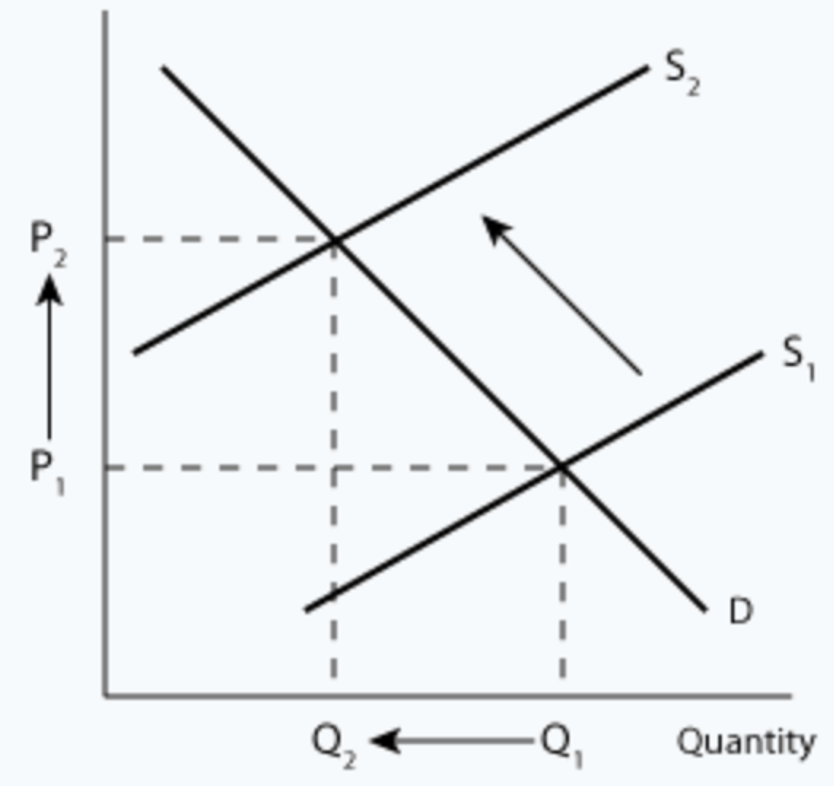

The economic theory of federalism draws heavily from the Tiebout model.[10] Charles Tiebout argued that competing local governments could, under certain conditions, produce public goods more efficiently than the national government could. Local governments act as firms in a marketplace for taxes and public goods, and consumer-citizens match their preferences to the providers. Efficient allocation requires mobile people and resources, enough jurisdictions with the freedom to set their own laws, and limited spillovers among jurisdictions (effects of one jurisdiction’s policies on others).

A related body of literature on “market-preserving federalism” argues that strong and self-reinforcing limits on national and local power can preserve markets and incentivize economic growth and development.[11] The upshot of this literature is that when local jurisdictions can compete on law, not only do they better match citizens’ policy preferences, but the rules tend toward greater economic efficiency.

In contrast to the economic gains from decentralization, moving authority over privacy from states to the federal government may have large political costs. It may deepen Americans’ growing dissatisfaction with their democracy. Experience belies the ideal of responsive national government when consumers, acting as citizens, want to learn about or influence the legislation and regulation that governs more and more areas of their lives. The “rejectionist” strain in American politics that Donald Trump’s insurgency and presidency epitomized may illustrate deep dissatisfaction with American democracy that has been growing for decades. Managing a highly personal and cultural issue like privacy through negotiation between large businesses and anonymous federal regulators would deepen trends that probably undermine the government’s legitimacy.

To put a constitutional point on it, preempting states on privacy contradicts the original design of our system, which assigned limited powers to the federal government.[12] The federal government’s enumerated powers generally consist of national public goods—particularly defense. The interstate commerce clause, inspired by state parochialism under the Articles of Confederation, exists to make commerce among states (and with tribes) regular; it is not rightly a font of power to regulate the terms and conditions of commerce generally.[13]

Preempting state law does not necessarily lead to regulatory certainty, as is often imagined. Section 230 of the Communications Decency Act may defeat once and for all the idea that federal legislation creates certainty.[14] More than a quarter century after its passage, it is hotly debated in Congress and threatened in the courts.[15]

The Fair Credit Reporting Act (FCRA) provides a similar example.[16] Passed in 1970, it comprehensively regulated credit reporting. Since then, Congress has amended it dozens of times, and regulators have made countless alterations through interpretation and enforcement.[17] The Consumer Financial Protection Bureau recently announced a new inquiry into data brokering under the FCRA.[18] That is fine, but it illustrates that the FCRA did not solve problems and stabilize the law. It just moved the jurisdiction to Washington, DC.

Meanwhile, as regulatory theory predicts, credit reporting has become a three-horse race.[19] A few slow-to-innovate firms have captured and maintained dominance thanks partially to the costs and barriers to entry that uniform regulation creates.

Legal certainty may be a chimera while business practices and social values are in flux. Certainty develops over time as industries settle into familiar behaviors and roles.

An Alternative to Preemption: Business and Consumer Choice

One way to deal with this highly complex issue is to promote competition for laws. The late, great Larry Ribstein, with several coauthors over the years, proposed one such legal mechanism: a law market empowered by choice-of-law statutes.[20] Drawing on the notion of market competition as a discovery process,[21] Ribstein and Henry Butler explained:

In order to solve the knowledge problem and to create efficient legal technologies, the legal system can use the same competitive process that encourages innovation in the private sector—that is, competition among suppliers of law. As we will see, this entails enforcing contracts among the parties regarding the applicable law. The greater the knowledge problem the more necessary it is to unleash markets for law to solve the problem.[22]

The proposal set forth below promotes just such competition and solves the privacy-law patchwork problem without the costs of federal preemption. It does this through a simple procedural regulation requiring states to enforce choice-of-law terms in privacy contracts, rather than through a heavy-handed, substantive federal law. Inspired by Butler and Ribstein’s proposal for pluralist insurance regulation,[23] the idea is to make the choice of legal regime a locus of privacy competition.

Modeled on the US system of state incorporation law, our proposed legislation would leave firms generally free to select the state privacy law under which they do business nationally. Firms would inform consumers, as they must to form a contract, that a given state’s laws govern their policies. Federal law would ensure that states respect those choice-of-law provisions, which would be enforced like any other contract term.

This would strengthen and deepen competition around privacy. If firms believed privacy was a consumer interest, they could select highly protective state laws and advertise that choice, currying consumer favor. If their competitors chose relatively lax state law, they could advertise to the public the privacy threats behind that choice. The process would help hunt out consumers’ true interests through an ongoing argument before consumers. Businesses’ and consumers’ ongoing choices, rather than a single choice by Congress followed by blunt, episodic amendments, would shape the privacy landscape.

The way consumers choose in the modern marketplace is a broad and important topic that deserves further study and elucidation. It nevertheless seems clear—and it is rather pat to observe—that consumers do not carefully read privacy policies and balance their implications. Rather, a hive mind of actors including competitors, advocates, journalists, regulators, and politicians pore over company policies and practices. Consumers take in branding and advertising, reputation, news, personal recommendations, rumors, and trends to decide on the services they use and how they use them.

That detail should not be overlooked: Consumers may use services differently based on the trust they place in them to protect privacy and related values. Using an information-intensive service is not a proposition to share everything or nothing. Consumers can and do shade their use and withhold information from platforms and services depending on their perceptions of whether the privacy protections offered meet their needs.

There is reason to be dissatisfied with the modern marketplace, in which terms of service and privacy policies are offered to the individual consumer on a “take it or leave it” basis. There is a different kind of negotiation, described above, between the hive mind and large businesses. But when the hive mind and business have settled on terms, individuals cannot negotiate bespoke policies reflecting their particular wants and needs. This collective decision-making may be why some advocates regard market processes as coercive. They do not offer custom choices to all but force individual consumers into channels cut by all.

The solution that orthodox privacy advocates offer does not respond well to this problem, because they would replace “take it or leave it” policies crafted in the crucible of the marketplace with “take it or leave it” policies crafted in a political and regulatory crucible. Their prescriptions are sometimes to require artificial notice and “choice,” such as whether to accept cookies when one visits websites. This, as experience shows, does not reach consumers when they are interested in choosing.

Choice of law in privacy competition is meant to preserve manifold choices when and where consumers make their choices, such as at the decision to transact, and then let consumers choose how they use the services they have decided to adopt. Let new entrants choose variegated privacy-law regimes, and consumers will choose among them. That does not fix the whole problem, but at least it doesn’t replace consumer choice with an “expert” one-size-fits-all choice.

In parallel to business competition around privacy choice of law, states would compete with one another to provide the most felicitous environment for consumers and businesses. Some states would choose more protection, seeking the rules businesses would choose to please privacy-conscious consumers. Others might choose less protection, betting that consumers prefer goods other than information control, such as free, convenient, highly interactive, and custom services.

Importantly, this mechanism would allow companies to opt in to various privacy regimes based on the type of service they offer, enabling a degree of fine-tuning appropriate for different industries and different activities that no alternative would likely offer. This would not only result in the experimentation and competition of federalism but also enable multiple overlapping privacy-regulation regimes, avoiding the “one-size-doesn’t-fit-all” problem.

While experimentation continued, state policies would probably rationalize and converge over time. There are institutions dedicated to this, such as the Uniform Law Commission, which is at its best when it harmonizes existing laws based on states’ experience.[24]

It is well within the federal commerce power to regulate state enforcement of choice-of-law provisions, because states may use them to limit interjurisdictional competition. Controlling that is precisely what the commerce power is for. Utah’s recent Social Media Regulation Act[25] barred enforcement of choice-of-law provisions, an effort to regulate nationally from a state capital. Federally backing contractual choice-of-law selections would curtail this growing problem.

At the same time, what our proposed protections for choice-of-law rules do is not much different from what contracts already routinely do and courts enforce in many industries. Contracting parties often specify the governing state’s law and negotiate for the law that best suits their collective needs.

Indeed, sophisticated business contracts increasingly include choice-of-law clauses that state the law that the parties wish to govern their relationship. In addition to settling uncertainty, these clauses might enable the contracting parties to circumvent those states’ laws they deem to be undesirable.[26]

This practice is not only business-to-business. Consumers regularly enter into contracts that include choice-of-law clauses—including regarding privacy law. Credit card agreements, stock and mutual fund investment terms, consumer-product warranties, and insurance contracts, among many other legal agreements, routinely specify the relevant state law that will govern.

In these situations, the insurance company, manufacturer, or mutual fund has effectively chosen the law. The consumer participates in this choice only to the same extent that she participates in any choices related to mass-produced products and services, that is, by deciding whether to buy the product or service.[27]

Allowing contracting parties to create their own legal certainty by contract would likely rankle states. Indeed, “we might expect governments to respond with hostility to the enforcement of choice-of-law clauses. In fact, however, the courts usually do enforce choice-of-law clauses.”[28] With some states trying to regulate nationally and some effectively doing so, the choice the states collectively face is having a role in privacy regulation or no role at all. Competition is better for them than exclusion from the field or minimization of their role through federal preemption of state privacy law. This proposal thus advocates simple federal legislation that preserves firms’ ability to make binding choice-of-law decisions and states’ ability to retain a say in the country’s privacy-governance regime.

Avoiding a Race to the Bottom

Some privacy advocates may object that state laws will not sufficiently protect consumers.[29] Indeed, there is literature arguing that federalism will produce a race to the bottom (i.e., competition leading every state to effectively adopt the weakest law possible), for example, when states offer incorporation laws that are the least burdensome to business interests in a way that arguably diverges from public or consumer interests.[30]

The race-to-the-bottom framing slants the issues and obscures ever-present trade-offs, however. Rules that give consumers high levels of privacy come at a cost in social interaction, price, and the quality of the goods they buy and services they receive. It is not inherently “down” or bad to prefer cheap or free goods and plentiful, social, commercial interaction. It is not inherently “up” or good to opt for greater privacy.

The question is what consumers want. The answers to that question—yes, plural—are the subject of constant research through market mechanisms when markets are free to experiment and are functioning well. Consumers’ demands can change over time through various mechanisms, including experience with new technologies and business models. We argue for privacy on the terms consumers want. The goal is maximizing consumer welfare, which sometimes means privacy and sometimes means sharing personal information in the interest of other goods. There is no race to the bottom in trading one good for another.

Yet the notion of a race to the bottom persists—although not without controversy. In the case of Delaware’s incorporation statutes, the issue is highly contested. Many scholars argue that the state’s rules are the most efficient—that “far from exploiting shareholders, . . . these rules actually benefit shareholders by increasing the wealth of corporations chartered in states with these rules.”[31]

As always, there are trade-offs, and the race-to-the-bottom hypothesis requires some unlikely assumptions. Principal among them, as Jonathan Macey and Geoffrey Miller discuss, is the assumption that state legislators are beholden to the interests of corporations over other constituencies vying for influence. As Macey and Miller explain, the presence of a powerful lobby of specialized and well-positioned corporate lawyers (whose interests are not the same as those of corporate managers) transforms the analysis and explains the persistence and quality of Delaware corporate law.[32]

In much the same vein, there are several reasons to think competition for privacy rules would not succumb to a race to the bottom.

First, if privacy advocates are correct, consumers put substantial pressure on companies to adopt stricter privacy policies. Simply opting in to the weakest state regime would not, as with corporate law, be a matter of substantial indifference to consumers but would (according to advocates) run contrary to their interests. If advocates are correct, firms avoiding stronger privacy laws would pay substantial costs. As a result, the impetus for states to offer weaker laws would be diminished. And, consistent with Macey and Miller’s “interest-group theory” of corporate law,[33] advocates themselves would be important constituencies vying to influence state privacy laws. Satisfying these advocates may benefit state legislators more than satisfying corporate constituencies does.

Second, “weaker” and “stronger” would not be the only dimensions on which states would compete for firms to adopt their privacy regimes. Rather, as mentioned above, privacy law is not one-size-fits-all. Different industries and services entail different implications for consumer interests. States could compete to specialize in offering privacy regimes attractive to distinct industries based on interest groups with particular importance to their economies. Minnesota (home of the Mayo Clinic) and Ohio (home of the Cleveland Clinic), for example, may specialize in health care and medical privacy, while California specializes in social media privacy.

Third, insurance companies are unlikely to be indifferent to the law that the companies they cover choose. Indeed, to the extent that insurers require covered firms to adopt specific privacy practices to control risk, those insurers would likely relish the prospect of outsourcing the oversight of these activities to state law enforcers. States could thus compete to mimic large insurers’ privacy preferences—which would by no means map onto “weaker” policies—to induce insurers to require covered firms to adopt their laws.

If a race to the bottom is truly a concern, the federal government could offer a 51st privacy alternative (that is, an optional federal regime as an alternative to the states’ various privacy laws). Assuming federal privacy regulation would be stricter (an assumption inherent in the race-to-the-bottom objection to state competition), such an approach would ensure that at least one sufficiently strong opt-in privacy regime would always be available. Among other things, this would preclude firms from claiming that no option offers a privacy regime stronger than those of the states trapped in the (alleged) race to the bottom.

Choice of law exists to a degree in the European Union, a trading bloc commonly regarded as uniformly regulated (and commonly regarded as superior on privacy because of a bias toward privacy over other goods). The General Data Protection Regulation (GDPR) gives EU member states broad authority to derogate from its provisions and create state-level exemptions. Article 23 of the GDPR allows states to exempt themselves from EU-wide law to safeguard nine listed broad governmental and public interests.[34] And Articles 85 through 91 provide for derogations, exemptions, and powers to impose additional requirements relative to the GDPR for a number of “specific data processing situations.”[35]

Finally, Article 56 establishes a “lead supervisory authority” for each business.[36] In the political, negotiated processes under the GDPR, this effectively allows companies to shade their regulatory obligations and enforcement outlook through their choices of location. For the United States’ sharper rule-of-law environment, we argue that the choice of law should be explicit and clear.

Refining the Privacy Choice-of-Law Proposal

The precise contours of a federal statute protecting choice-of-law terms in contracts will determine whether it successfully promotes interfirm and interstate competition. Language will also determine its political salability.

Questions include: What kind of notice, if any, should be required to make consumers aware that they are dealing with a firm under a law regime not their own? Consumers are notoriously unwilling to investigate privacy terms—or any other contract terms—in advance, and when considering the choice of law, they would probably not articulate it to themselves. But the competitive dynamics described earlier would probably communicate relevant information to consumers even without any required notice. As always, competitors will have an incentive to ensure consumers are appropriately well-informed when they can diminish their rivals or elevate themselves in comparison by doing so.[37]

Would there be limits on which state’s laws a firm could choose? For example, could a company choose the law of a state where neither the company nor the consumer is domiciled? States would certainly argue that a company should not be able to opt out of the law of the state where it is domiciled. The federal legislation we propose would allow unlimited choice. Such a choice is important if the true benefits of jurisdictional competition are to be realized.

A federal statute requiring states to enforce choice-of-law terms should not override state law denying enforcement of choice-of-law terms that are oppressive, unfair, or improperly bargained for. In cases such as Carnival Cruise Lines v. Shute[38] and The Bremen v. Zapata Off-Shore Co.,[39] the Supreme Court has considered whether forum-selection clauses in contracts might be invalid. The Court has generally upheld such clauses, but they can be oppressive if they require plaintiffs in Maine to litigate in Hawaii, for example, without a substantial reason why Hawaii courts are the appropriate forum. Choice-of-law terms do not impose the cost of travel to remote locations, but they could be used not to establish the law governing the parties but rather to create a strategic advantage unrelated to the law in litigation. Deception built into a contract’s choice-of-law terms should remain grounds for invalidating the contract under state law, even if the state is precluded from barring choice-of-law terms by statute.

The race-to-the-bottom argument raises the question of whether impeding states from overriding contractual choice-of-law provisions would be harmful to state interests, especially since privacy law concerns consumer rights. However, there are reasons to believe race-to-the-bottom incentives would be tempered by greater legal specialization and certainty and by state courts’ ability to refuse to enforce choice-of-law clauses in certain limited circumstances. As Erin O’Hara and Ribstein put it:

Choice-of-law clauses reduce uncertainty about the parties’ legal rights and obligations and enable firms to operate in many places without being subject to multiple states’ laws. These reduced costs may increase the number of profitable transactions and thereby increase social wealth. Also, the clauses may not change the results of many cases because courts in states that prohibit a contract term might apply the more lenient law of a state that has close connections with the parties even without a choice-of-law clause.[40]

Determining when, exactly, a state court can refuse to enforce a firm’s choice of privacy law because of excessive leniency is tricky, but the federal statute could set out a framework for when a court could apply its own state’s law. Much like the independent federal alternative discussed above, specific minimum requirements in the federal law could ensure that any race to the bottom that does occur can go only so far. Of course, it would be essential that any such substantive federal requirements be strictly limited, or else the benefits of jurisdictional competition would be lost.

The converse to the problem of a race to the bottom resulting from state competition is the “California effect”—the prospect of states adopting onerous laws from which no company (or consumer) can opt out. States can regulate nationally through one small tendril of authority: the power to prevent businesses and consumers from agreeing on the law that governs their relationships. If a state regulates in a way that it thinks will be disfavored, it will bar choice-of-law provisions in contracts so consumers and businesses cannot exercise their preference.

Utah’s Social Media Regulation Act, for example, includes mandatory age verification for all social media users,[41] because companies must collect proof that consumers are either of age or not in Utah. To prevent consumers and businesses from avoiding this onerous requirement, Utah bars waivers of the law’s requirements “notwithstanding any contract or choice-of-law provision in a contract.”[42] If parties could choose their law, that would render Utah’s law irrelevant, so Utah cuts off that avenue. This demonstrates the value of a proposal like the one contemplated here.

Proposed Legislation

Creating a federal policy to stop national regulation coming from state capitals, while still preserving competition among states and firms, is unusual, and it poses the first drafting challenge. Congress usually creates its own policy and preempts states in that area to varying degrees. There is a well-developed law around this type of preemption, which is sometimes implied and sometimes expressed in statute.[43] Our proposal does not operate that way. It merely withdraws state authority to prevent parties from freely contracting about the law that applies to them.

A second, minor challenge concerns the subject matter about which states may not regulate choice of law. Barring states from regulating choice of law entirely is an option, but if the focus is on privacy only, the preemption must be couched to allow regulation of choice of law in other areas. Thus, the statutory language must define the scope of “privacy.”

Finally, the withdrawal of state authority should probably be limited to positive enactments, such as statutes and regulations, leaving intact common-law practice related to choice-of-law provisions.[44] “Statute,” “enactment,” and “provision” are preferable in preemptive language to “law,” which is ambiguous.

These challenges, and possibly more, are tentatively addressed in the following first crack at statutory language, inspired by several preemptive federal statutes, including the Employee Retirement Income Security Act of 1974,[45] the Airline Deregulation Act,[46] the Federal Aviation Administration Authorization Act of 1994,[47] and the Federal Railroad Safety Act.[48]

A state, political subdivision of a state, or political authority of at least two states may not enact or enforce any statute, regulation, or other provision barring the adoption or application of any contractual choice-of-law provision to the extent it affects contract terms governing commercial collection, processing, security, or use of personal information.

Conclusion

This report introduces a statutory privacy framework centered on individual states and consistent with the United States’ constitutional design. But it safeguards companies from the challenge created by the intersection of that design and the development of modern commerce and communication, which may require them to navigate the complexities and inefficiencies of serving multiple regulators. It fosters an environment conducive to jurisdictional competition and experimentation.

We believe giving states the chance to compete under this approach should be explored in lieu of consolidating privacy law in the hands of one central federal regulator. Competition among states to provide optimal legislation and among businesses to provide optimal privacy policies will help discover and deliver on consumers’ interests, including privacy, of course, but also interactivity, convenience, low costs, and more.

Consumers’ diverse interests are not known now, and they cannot be predicted reliably for the undoubtedly interesting technological future. Thus, it is important to have a system for discovering consumers’ interests in privacy and the regulatory environments that best help businesses serve consumers. It is unlikely that a federal regulatory regime can do these things. The federal government could offer a 51st option in such a system, of course, so advocates for federal involvement could see their approach tested alongside the states’ approaches.

[1] See Uniform Law Commission, “What Is a Model Act?,” https://www.uniformlaws.org/acts/overview/modelacts.

[2] 740 Ill. Comp. Stat. 14/15 (2008).

[3] See Jim Harper, Privacy and the Four Categories of Information Technology, American Enterprise Institute, May 26, 2020, https://www.aei.org/research-products/report/privacy-and-the-four-categories-of-information-technology.

[4] See Jim Harper, “What Do People Mean by ‘Privacy,’ and How Do They Prioritize Among Privacy Values? Preliminary Results,” American Enterprise Institute, March 18, 2022, https://www.aei.org/research-products/report/what-do-people-mean-by-privacy-and-how-do-they-prioritize-among-privacy-values-preliminary-results.

[5] Gramm-Leach-Bliley Act, 15 U.S.C. 6801, § 501 et seq.

[6] Health Insurance Portability and Accountability Act of 1996, Pub. L. No. 104-191, § 264.

[7] Estelle Masse, quoted in Ashleigh Hollowell, “Is Privacy Only for the Elite? Why Apple’s Approach Is a Marketing Advantage,” VentureBeat, October 18, 2022, https://venturebeat.com/security/is-privacy-only-for-the-elite-why-apples-approach-is-a-marketing-advantage.

[8] Competition among firms regarding privacy is common, particularly in digital markets. Notably, Apple has implemented stronger privacy protections than most of its competitors have, particularly with its App Tracking Transparency framework in 2021. See, for example, Brian X. Chen, “To Be Tracked or Not? Apple Is Now Giving Us the Choice,” New York Times, April 26, 2021, https://www.nytimes.com/2021/04/26/technology/personaltech/apple-app-tracking-transparency.html. For Apple, this approach is built into the design of its products and offers what it considers a competitive advantage: “Because Apple designs both the iPhone and processors that offer heavy-duty processing power at low energy usage, it’s best poised to offer an alternative vision to Android developer Google which has essentially built its business around internet services.” Kif Leswing, “Apple Is Turning Privacy into a Business Advantage, Not Just a Marketing Slogan,” CNBC, June 8, 2021, https://www.cnbc.com/2021/06/07/apple-is-turning-privacy-into-a-business-advantage.html. Apple has built a substantial marketing campaign around these privacy differentiators, including its ubiquitous “Privacy. That’s Apple.” slogan. See Apple, “Privacy,” https://www.apple.com/privacy. Similarly, “Some of the world’s biggest brands (including Unilever, AB InBev, Diageo, Ferrero, Ikea, L’Oréal, Mars, Mastercard, P&G, Shell, Unilever and Visa) are focusing on taking an ethical and privacy-centered approach to data, particularly in the digital marketing and advertising context.” Rachel Dulberg, “Why the World’s Biggest Brands Care About Privacy,” Medium, September 14, 2021, https://uxdesign.cc/who-cares-about-privacy-ed6d832156dd.

[9] New State Ice Co. v. Liebmann, 285 US 262, 311 (1932) (Brandeis, J., dissenting) (“To stay experimentation in things social and economic is a grave responsibility. Denial of the right to experiment may be fraught with serious consequences to the Nation. It is one of the happy incidents of the federal system that a single courageous State may, if its citizens choose, serve as a laboratory; and try novel social and economic experiments without risk to the rest of the country.”).

[10] See Charles M. Tiebout, “A Pure Theory of Local Expenditures,” Journal of Political Economy 64, no. 5 (1956): 416–24, https://www.jstor.org/stable/1826343.

[11] See, for example, Barry R. Weingast, “The Economic Role of Political Institutions: Market-Preserving Federalism and Economic Development,” Journal of Law, Economics, & Organization 11, no. 1 (April 1995): 1–31, https://www.jstor.org/stable/765068; Yingyi Qian and Barry R. Weingast, “Federalism as a Commitment to Preserving Market Incentives,” Journal of Economic Perspectives 11, no. 4 (Fall 1997): 83–92, https://www.jstor.org/stable/2138464; and Rui J. P. de Figueiredo Jr. and Barry R. Weingast, “Self-Enforcing Federalism,” Journal of Law, Economics, & Organization 21, no. 1 (April 2005): 103–35, https://www.jstor.org/stable/3554986.

[12] See US Const. art. I, § 8 (enumerating the powers of the federal Congress).

[13] See generally Randy E. Barnett, Restoring the Lost Constitution: The Presumption of Liberty (Princeton, NJ: Princeton University Press, 2014), 274–318.

[14] Protection for Private Blocking and Screening of Offensive Material, 47 U.S.C. § 230.

[15] See Geoffrey A. Manne, Ben Sperry, and Kristian Stout, “Who Moderates the Moderators? A Law & Economics Approach to Holding Online Platforms Accountable Without Destroying the Internet,” Rutgers Computer & Technology Law Journal 49, no. 1 (2022): 39–53, https://laweconcenter.org/wp-content/uploads/2021/11/Stout-Article-Final.pdf (detailing some of the history of how Section 230 immunity expanded and differs from First Amendment protections); Meghan Anand et al., “All the Ways Congress Wants to Change Section 230,” Slate, August 30, 2023, https://slate.com/technology/2021/03/section-230-reform-legislative-tracker.html (tracking every proposal to amend or repeal Section 230); and Technology & Marketing Law Blog, website, https://blog.ericgoldman.org (tracking all Section 230 cases with commentary).

[16] Fair Credit Reporting Act, 15 U.S.C. § 1681 et seq.

[17] See US Federal Trade Commission, Fair Credit Reporting Act: 15 U.S.C. § 1681, May 2023, https://www.ftc.gov/system/files/ftc_gov/pdf/fcra-may2023-508.pdf (detailing changes to the Fair Credit Reporting Act and its regulations over time).

[18] US Federal Reserve System, Consumer Financial Protection Bureau, “CFPB Launches Inquiry into the Business Practices of Data Brokers,” press release, May 15, 2023, https://www.consumerfinance.gov/about-us/newsroom/cfpb-launches-inquiry-into-the-business-practices-of-data-brokers.

[19] US Federal Reserve System, Consumer Financial Protection Bureau, List of Consumer Reporting Companies, 2021, 8, https://files.consumerfinance.gov/f/documents/cfpb_consumer-reporting-companies-list_03-2021.pdf (noting there are “three big nationwide providers of consumer reports”).

[20] See, for example, Erin A. O’Hara and Larry E. Ribstein, The Law Market (Oxford, UK: Oxford University Press, 2009); Erin A. O’Hara O’Connor and Larry E. Ribstein, “Conflict of Laws and Choice of Law,” in Procedural Law and Economics, ed. Chris William Sanchirico (Northampton, MA: Edward Elgar Publishing, 2012), in Encyclopedia of Law and Economics, 2nd ed., ed. Gerrit De Geest (Northampton, MA: Edward Elgar Publishing, 2009); and Bruce H. Kobayashi and Larry E. Ribstein, eds., Economics of Federalism (Northampton, MA: Edward Elgar Publishing, 2007).

[21] See F. A. Hayek, “The Use of Knowledge in Society,” American Economic Review 35, no. 4 (September 1945): 519–30, https://www.jstor.org/stable/1809376?seq=12.

[22] Henry N. Butler and Larry E. Ribstein, “Legal Process for Fostering Innovation” (working paper, George Mason University, Antonin Scalia Law School, Fairfax, VA), 2, https://masonlec.org/site/rte_uploads/files/Butler-Ribstein-Entrepreneurship-LER.pdf.

[23] See Henry N. Butler and Larry E. Ribstein, “The Single-License Solution,” Regulation 31, no. 4 (Winter 2008–09): 36–42, https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1345900.

[24] See Uniform Law Commission, “Acts Overview,” https://www.uniformlaws.org/acts/overview.

[25] Utah Code Ann. § 13-63-101 et seq. (2023).

[26] O’Hara and Ribstein, The Law Market, 5.

[27] O’Hara and Ribstein, The Law Market, 5.

[28] O’Hara and Ribstein, The Law Market, 5.

[29] See Christiano Lima-Strong, “The U.S.’s Sixth State Privacy Law Is Too ‘Weak,’ Advocates Say,” Washington Post, March 30, 2023, https://www.washingtonpost.com/politics/2023/03/30/uss-sixth-state-privacy-law-is-too-weak-advocates-say.

[30] See, for example, William L. Cary, “Federalism and Corporate Law: Reflections upon Delaware,” Yale Law Journal 83, no. 4 (March 1974): 663–705, https://openyls.law.yale.edu/bitstream/handle/20.500.13051/15589/33_83YaleLJ663_1973_1974_.pdf (arguing Delaware could export the costs of inefficiently lax regulation through the dominance of its incorporation statute).

[31] Jonathan R. Macey and Geoffrey P. Miller, “Toward an Interest-Group Theory of Delaware Corporate Law,” Texas Law Review 65, no. 3 (February 1987): 470, https://openyls.law.yale.edu/bitstream/handle/20.500.13051/1029/Toward_An_Interest_Group_Theory_of_Delaware_Corporate_Law.pdf. See also Daniel R. Fischel, “The ‘Race to the Bottom’ Revisited: Reflections on Recent Developments in Delaware’s Corporation Law,” Northwestern University Law Review 76, no. 6 (1982): 913–45, https://chicagounbound.uchicago.edu/cgi/viewcontent.cgi?referer=&httpsredir=1&article=2409&context=journal_articles.

[32] Macey and Miller, “Toward an Interest-Group Theory of Delaware Corporate Law.”

[33] Macey and Miller, “Toward an Interest-Group Theory of Delaware Corporate Law.”

[34] Commission Regulation 2016/679, General Data Protection Regulation art. 23.

[35] Commission Regulation 2016/679, General Data Protection Regulation arts. 85–91.

[36] Commission Regulation 2016/679, General Data Protection Regulation art. 56.

[37] See the discussion in endnote 8.

[38] Carnival Cruise Lines v. Shute, 499 US 585 (1991).

[39] The Bremen v. Zapata, 407 US 1 (1972).

[40] O’Hara and Ribstein, The Law Market, 8.

[41] See Jim Harper, “Perspective: Utah’s Social Media Legislation May Fail, but It’s Still Good for America,” Deseret News, April 6, 2023, https://www.aei.org/op-eds/utahs-social-media-legislation-may-fail-but-its-still-good-for-america.

[42] Utah Code Ann. § 13-63-401 (2023).

[43] See Bryan L. Adkins, Alexander H. Pepper, and Jay B. Sykes, Federal Preemption: A Legal Primer, Congressional Research Service, May 18, 2023, https://sgp.fas.org/crs/misc/R45825.pdf.

[44] Congress should not interfere with interpretation of choice-of-law provisions. These issues are discussed in Tanya J. Monestier, “The Scope of Generic Choice of Law Clauses,” UC Davis Law Review 56, no. 3 (February 2023): 959–1018, https://digitalcommons.law.buffalo.edu/cgi/viewcontent.cgi?article=2148&context=journal_articles.

[45] Employee Retirement Income Security Act of 1974, 29 U.S.C. § 1144(a).

[46] Airline Deregulation Act, 49 U.S.C. § 41713(b).

[47] Federal Aviation Administration Authorization Act of 1994, 49 U.S.C. § 14501.

[48] Federal Railroad Safety Act, 49 U.S.C. § 20106.